How to Implement In-Memory Caching in .NET Console App Using C#

What is a Cache and Why is it Important?

A cache is a temporary storage area that holds frequently accessed data to improve performance. Instead of recalculating or fetching the same data repeatedly from a slower source (like a database or external API), the cache stores this data so it can be quickly retrieved. This reduces latency, minimizes server load, and enhances the overall efficiency of your application.

Types of Cache: First-Level and Second-Level

There are typically two levels of cache:

- First-Level Cache (In-Memory Cache):

This cache exists locally within the application’s memory (RAM). It is fast and easy to implement but limited by the available memory. Since it’s stored locally, when the application restarts or crashes, the cache is lost. In-memory cache is ideal for smaller datasets or temporary storage of frequently accessed items.

- Second-Level Cache (Distributed Cache):

This cache is stored on a separate system or service (like Redis, Memcached, etc.). A distributed cache can be shared across multiple instances of your application, making it more scalable and fault-tolerant. It persists even if individual application instances are restarted. This is useful for larger datasets and scenarios where multiple application instances need to share cached data.

Use Cases for Caching

1. Infrequent Database Updates

If the data in your database changes only a few times a day (e.g., three times), it makes sense to cache it for a limited time. This reduces database load and improves response times, allowing your application to operate more efficiently.

2. ERP Data Retrieval

When dealing with data from ERP systems like SAP that updates only once a day (e.g., sales data), it’s unnecessary to query the ERP system every time. Caching this data in Redis allows your application to retrieve it quickly, enhancing performance while minimizing unnecessary queries.

3. APIs with Rate Limits

When interacting with third-party APIs that impose rate limits (e.g., social media APIs), caching the results can prevent you from exceeding those limits while still providing users with timely information.

4. Static Content

For content that doesn’t change often, such as configuration settings or lookup tables, caching can prevent unnecessary database queries, leading to faster access times and improved user experience.

In-Memory Caching in .NET: System.Runtime.Caching/MemoryCache and IMemoryCache

Caching is a powerful tool to improve the performance and scalability of an application by reducing the computational cost and time needed to retrieve or generate frequently accessed data. Instead of querying the database or external APIs repeatedly, the cache stores this data in memory, allowing for much faster access.

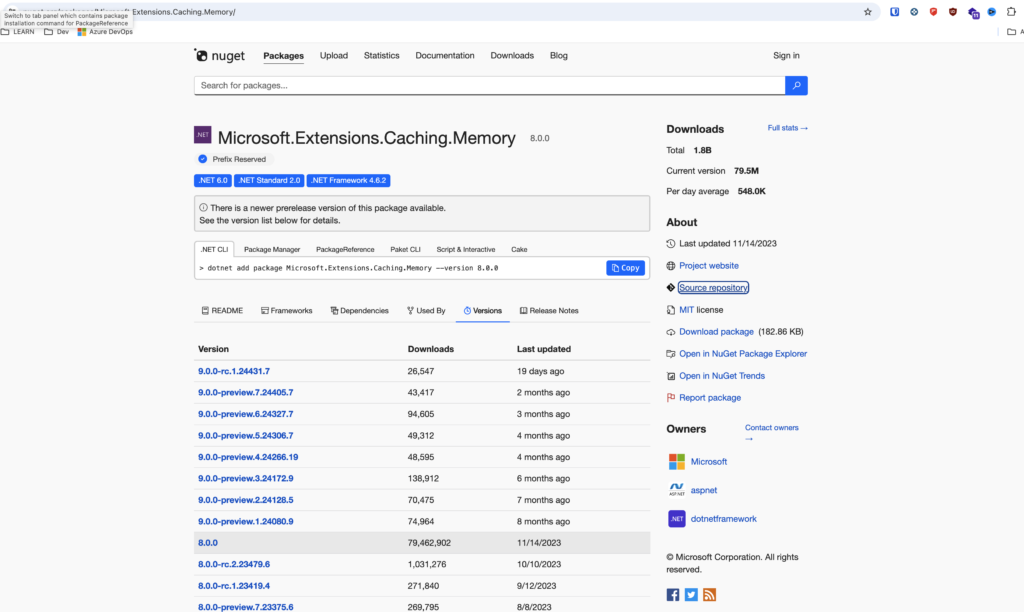

.NET offers two main options for managing in-memory caching: System.Runtime.Caching/MemoryCache and Microsoft.Extensions.Caching.Memory/IMemoryCache. Both libraries allow you to store data temporarily in memory to avoid costly operations like repeated database queries or complex calculations.

System.Runtime.Caching/MemoryCache

- System.Runtime.Caching/MemoryCache is a caching library that works with:

- .NET Standard 2.0 or later

- .NET Framework 4.5 or later

This option is often used when porting code from .NET Framework to modern .NET. For new .NET projects, however, IMemoryCache is generally preferred because it’s more lightweight and easier to use.
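For comparison, here is a minimal sketch of the System.Runtime.Caching API in a console program with top-level statements. It assumes the System.Runtime.Caching NuGet package is referenced (on .NET Framework the assembly is built in); the key name "greeting" and the five-minute lifetime are arbitrary choices for illustration.

```csharp
using System;
using System.Runtime.Caching;

// MemoryCache.Default is a shared, process-wide cache instance.
ObjectCache cache = MemoryCache.Default;

// Expire the entry five minutes from now, whether or not it is read.
var policy = new CacheItemPolicy
{
    AbsoluteExpiration = DateTimeOffset.UtcNow.AddMinutes(5)
};

// "greeting" is a hypothetical key chosen for this sketch.
cache.Set("greeting", "Hello from the cache", policy);

// Get returns null when the key is missing or has expired.
var cached = cache.Get("greeting") as string;
Console.WriteLine(cached ?? "(cache miss)");
```

Values are stored as object, so reads need a cast; this is one of the ergonomic differences from the generic helpers that IMemoryCache offers.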

IMemoryCache (Preferred Option)

- IMemoryCache, available via the Microsoft.Extensions.Caching.Memory library, is recommended for most new .NET applications. While it’s integrated with ASP.NET Core, it also works perfectly in any .NET project (like console apps or desktop apps) that needs efficient caching.

In .NET, the most common way to implement in-memory caching is by using the IMemoryCache interface. This cache stores data in the server’s memory, which makes it ideal for small-to-medium applications or systems where you don’t need to share cached data across multiple servers.
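Outside ASP.NET Core there is no dependency injection container by default, so a console app can create the cache directly. The following is a minimal sketch, assuming the Microsoft.Extensions.Caching.Memory package is referenced; the keys "answer" and "missing" are made up for the example.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// MemoryCache is the built-in implementation of IMemoryCache.
using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());

// Store a value that expires one minute from now.
cache.Set("answer", 42, TimeSpan.FromMinutes(1));

// TryGetValue reports whether the key was found and hands back the value.
if (cache.TryGetValue("answer", out int answer))
{
    Console.WriteLine($"Cache hit: {answer}");
}

// A key that was never stored is simply a miss, not an error.
bool found = cache.TryGetValue("missing", out string? nothing);
Console.WriteLine($"Found missing key: {found}");
```

In an ASP.NET Core project you would normally register the cache with AddMemoryCache and receive IMemoryCache through constructor injection instead, as the example later in this article does.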

Why Use IMemoryCache?

- Performance Boost:

Caching reduces the work required to generate or retrieve data that changes infrequently and is expensive to produce (like database calls or API requests).

- Fast Access:

Since the cache is stored in the memory of the application, accessing cached data is much faster than recalculating or refetching it from the original source.

- No External Dependencies:

Unlike distributed caches (which rely on external services like Redis), in-memory caching does not require any external infrastructure, making it easy to set up and use.

Cache Considerations and Best Practices

When using IMemoryCache, it’s important to follow a few guidelines to ensure that your cache operates efficiently and doesn’t introduce issues like stale data or memory overuse:

- Do Not Depend on Cached Data:

Applications should never rely on cached data to be always available. Cache is a tool to improve speed, but the original source should remain accessible if needed.

- Set Expiration Policies:

Cached items should have appropriate expiration times to prevent memory overflow and ensure that outdated data is refreshed when necessary. You can set both absolute and sliding expiration times.

- Use for Expensive or Slow Operations:

Cache only the results of expensive or time-consuming operations, such as database queries, API responses, or computation-heavy tasks. Avoid caching items that change frequently or are lightweight to retrieve.
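The first two guidelines can be sketched together: GetOrCreate falls back to the original source on a miss, and the entry combines a sliding expiration with an absolute cap. This is an illustrative sketch only; LoadSettingFromSource, the key "ui-theme", and the durations are hypothetical stand-ins, and the Microsoft.Extensions.Caching.Memory package is assumed.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());

int sourceCalls = 0;

// Hypothetical stand-in for a slow source such as a database or remote API.
string LoadSettingFromSource()
{
    sourceCalls++;
    return "dark-mode";
}

string GetSetting()
{
    // GetOrCreate returns the cached value, or runs the factory on a miss,
    // so callers never depend on the entry being present.
    return cache.GetOrCreate("ui-theme", entry =>
    {
        // Evict after 5 minutes without reads...
        entry.SlidingExpiration = TimeSpan.FromMinutes(5);
        // ...but never keep the entry longer than 1 hour in total.
        entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromHours(1);
        return LoadSettingFromSource();
    })!;
}

Console.WriteLine(GetSetting()); // loads from the source
Console.WriteLine(GetSetting()); // served from the cache
Console.WriteLine($"Source calls: {sourceCalls}");

// Simulate an eviction: the next read falls back to the source again.
cache.Remove("ui-theme");
Console.WriteLine(GetSetting());
Console.WriteLine($"Source calls: {sourceCalls}");
```

Pairing a sliding expiration with an absolute one keeps frequently read entries warm while still guaranteeing that stale data is eventually refreshed.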

IMemoryCache vs Distributed Cache

- IMemoryCache:

The in-memory cache stores data in the server’s RAM. It works well for single-server applications, but in distributed systems (like web farms with multiple servers), it’s less ideal. The cache is only accessible from the server that originally stored it, so if another server handles a request, the cached data won’t be available unless a sticky session configuration is used.

- Distributed Cache (e.g., Redis):

In distributed systems, a distributed cache (like Redis) is preferred. It allows caching data in a central store, accessible by multiple servers. This avoids cache inconsistency issues when using multiple application instances.

Storing Cache Data: Key-Value Pairs

Both IMemoryCache and distributed caches store data as key-value pairs. This means you can store a value under a unique key, making retrieval straightforward. In-memory caches can hold any .NET object directly, whereas distributed caches typically store serialized data, such as byte[], so objects must be serialized before they are sent over the network and deserialized when they are read back.
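To make the byte[] point concrete, here is a small sketch of the serialization round trip an object goes through before and after a byte-oriented cache. It uses only System.Text.Json and does not talk to a real distributed cache; the dictionary standing in for a user is an assumption made to keep the example short.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// A plain dictionary stands in for a user object in this sketch.
var user = new Dictionary<string, string>
{
    ["Id"] = "1",
    ["Name"] = "Alice"
};

// Serialize to UTF-8 JSON bytes, the shape a byte[]-based cache would store.
byte[] payload = JsonSerializer.SerializeToUtf8Bytes(user);
Console.WriteLine($"Payload size: {payload.Length} bytes");

// Reading back: deserialize the bytes into the original type.
var restored = JsonSerializer.Deserialize<Dictionary<string, string>>(payload);
Console.WriteLine(restored?["Name"]);
```

With IMemoryCache none of this is needed, because the cache keeps a reference to the object itself.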

Example: How to Use IMemoryCache with Minimal API and .NET

Disclaimer: This example is purely for educational purposes. There are better ways to write code and applications that can optimize this example. Use this as a starting point for learning, but always strive to follow best practices and improve your implementation.

Prerequisites

Before starting, make sure you have the following installed:

- .NET SDK: Download and install the .NET SDK if you haven’t already.

- Visual Studio Code (VSCode): Install Visual Studio Code for a lightweight code editor.

- C# Extension for VSCode: Install the C# extension for VSCode to enable C# support.

Create a new Web API project using Visual Studio or from the command line:

    dotnet new web -n WepApiCachingInMemory

Install Necessary Libraries:

    dotnet add package Microsoft.Extensions.Caching.Memory

Create the User.cs Model:

    public class User
    {
        public int Id { get; set; }
        public string Name { get; set; } = string.Empty;
    }

Create the UserService.cs service, which also contains the interface:

    using Microsoft.Extensions.Caching.Memory;

    public interface IUserService
    {
        Task<List<User>> GetUsers();
    }

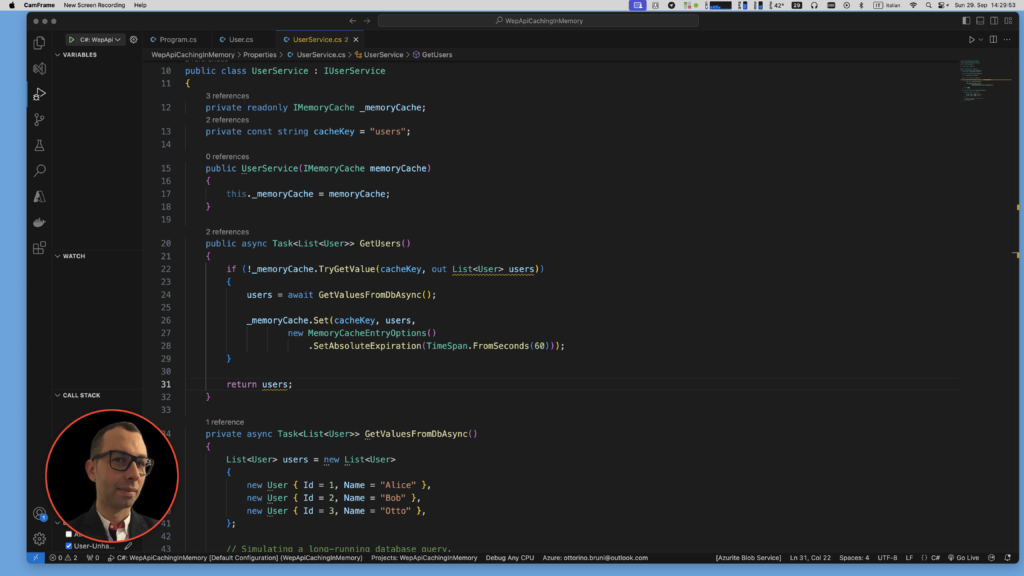

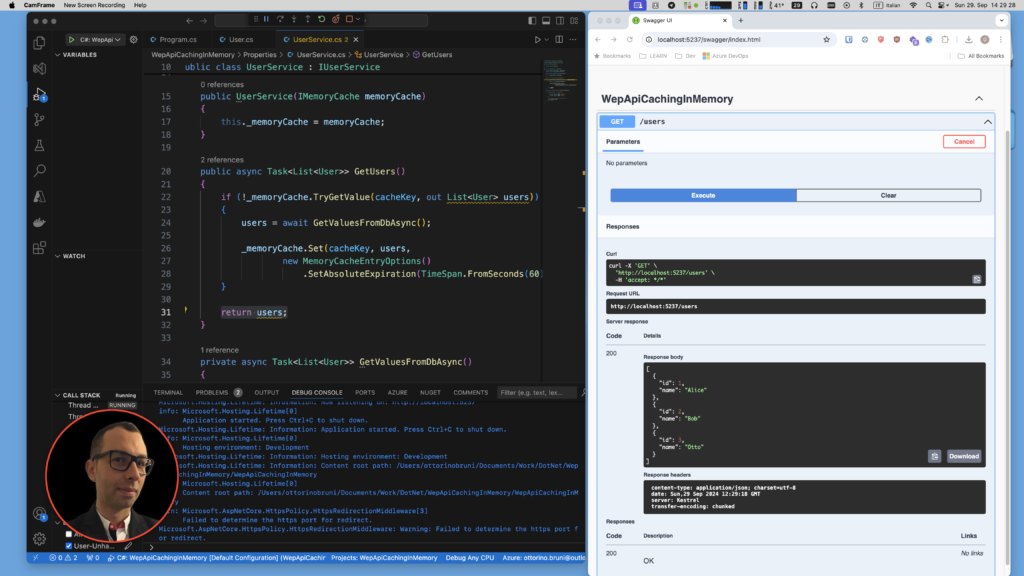

public class UserService : IUserService

{

private readonly IMemoryCache _memoryCache;

private const string cacheKey = "users";

public UserService(IMemoryCache memoryCache)

{

this._memoryCache = memoryCache;

}

public async Task<List<User>> GetUsers()

{

if (!_memoryCache.TryGetValue(cacheKey, out List<User> users))

{

users = await GetValuesFromDbAsync();

_memoryCache.Set(cacheKey, users,

new MemoryCacheEntryOptions()

.SetAbsoluteExpiration(TimeSpan.FromSeconds(60)));

}

return users;

}

private async Task<List<User>> GetValuesFromDbAsync()

{

List<User> users = new List<User>

{

new User { Id = 1, Name = "Alice" },

new User { Id = 2, Name = "Bob" },

new User { Id = 3, Name = "Otto" },

};

// Simulating a long-running database query.

await Task.Delay(2000);

return users;

}

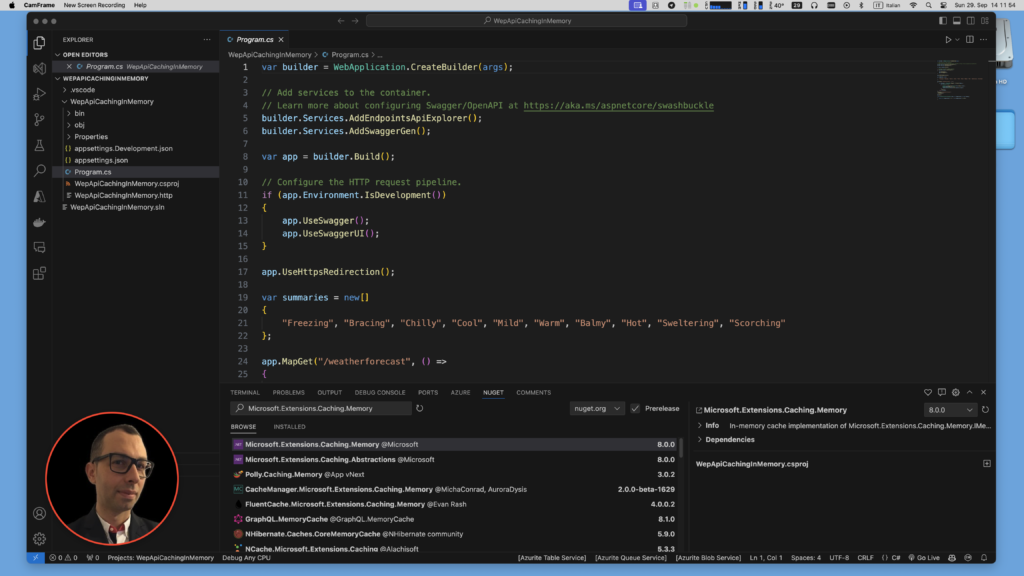

}We clean what we don’t need and update the Program.cs:

    // Adjust this to the namespace that contains IUserService and UserService
    // in your project (omit it if they are in the global namespace).
    using WepApiCachingInMemory.Properties;

    var builder = WebApplication.CreateBuilder(args);

    builder.Services.AddMemoryCache(); // Register IMemoryCache
    builder.Services.AddScoped<IUserService, UserService>(); // Register UserService

    // Add services to the container.
    // Learn more about configuring Swagger/OpenAPI at https://aka.ms/aspnetcore/swashbuckle
    // AddSwaggerGen and UseSwagger come from the Swashbuckle.AspNetCore package;
    // WithOpenApi comes from the Microsoft.AspNetCore.OpenApi package.
    builder.Services.AddEndpointsApiExplorer();
    builder.Services.AddSwaggerGen();

    var app = builder.Build();

    // Configure the HTTP request pipeline.
    if (app.Environment.IsDevelopment())
    {
        app.UseSwagger();
        app.UseSwaggerUI();
    }

    app.UseHttpsRedirection();

    app.MapGet("/users", async (IUserService userService) =>
    {
        var users = await userService.GetUsers();
        return Results.Ok(users);
    })
    .WithName("GetUsers")
    .WithOpenApi();

    app.Run();

Run Your Project:

When the API is called for the first time, it simulates a connection to the database to retrieve the requested data. This initial call can take some time, as it involves querying the database and processing the response. Once the data is retrieved, it is stored in the cache for faster access in subsequent requests.

On subsequent API calls, instead of querying the database again, the application checks the cache for the requested data. If the data is found in the cache, it is returned immediately, which is much faster than fetching it from the database. This cached data will remain available until it expires based on the expiration policies set earlier. After the cache expires, the next API call will again trigger a database connection to refresh the cached data.
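The same slow-then-fast behaviour can be observed without a web server. The sketch below is a console program that times two consecutive reads through GetOrCreateAsync; LoadUsersAsync is a hypothetical stand-in that mirrors the 2-second delay in UserService, and exact timings will vary by machine.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());

// Simulated slow source, mirroring the Task.Delay used in UserService.
async Task<string[]> LoadUsersAsync()
{
    await Task.Delay(2000);
    return new[] { "Alice", "Bob", "Otto" };
}

// Reads the users through the cache and returns the elapsed milliseconds.
async Task<long> TimeCallAsync()
{
    var stopwatch = Stopwatch.StartNew();
    await cache.GetOrCreateAsync("users", entry =>
    {
        entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromSeconds(60);
        return LoadUsersAsync();
    });
    return stopwatch.ElapsedMilliseconds;
}

long first = await TimeCallAsync();  // miss: pays the simulated delay
long second = await TimeCallAsync(); // hit: served from memory

Console.WriteLine($"First call:  {first} ms");
Console.WriteLine($"Second call: {second} ms");
```

The first call should take roughly two seconds and the second only a few milliseconds, until the 60-second entry expires and the next read pays the delay again.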

Code Explanation

- UserService:

  - The UserService class uses IMemoryCache to store and retrieve user data.

  - It simulates a database call that takes time (with Task.Delay(2000)).

  - User data is cached for 60 seconds.

- Minimal API:

  - The code sets up a simple endpoint, /users, that returns the list of users, first checking the cache and then retrieving the data from the simulated database if not found.

  - There’s no separate controller; everything is defined directly in the Program.cs file, making it compact and minimal.

Conclusion: Understanding and Using Caching in .NET

In summary, caching is a crucial mechanism for optimizing application performance and resource utilization. By storing frequently accessed data temporarily, caching reduces the need for repetitive data fetching from slower sources like databases or external APIs. This leads to faster response times, decreased server load, and an overall more efficient application.

By incorporating caching into your .NET applications, you can achieve significant performance enhancements with relatively little effort. With just a few lines of code and some thoughtful implementation, IMemoryCache can help you improve your application’s efficiency and user experience. Start leveraging caching in your projects today and enjoy the benefits of faster data access and reduced server load!

Thanks for reading!
